Nvidia’s next-generation GPU architecture is finally here. Nearly a year and a half after the GeForce RTX 20-series launched with Nvidia’s Turing architecture inside, and three years after the launch of the data center-focused Volta GPUs, CEO Jensen Huang unveiled graphics cards powered by the new Ampere architecture during a digital GTC 2020 keynote on Thursday morning. It looks like an absolute monster.

Ampere debuts in the form of the A100, a humongous data center GPU powering Nvidia’s new DGX-A100 systems. Make no mistake: This 6,912 CUDA core-packing beast targets data scientists, with internal hardware optimized around deep learning tasks. You won’t be using it to play Cyberpunk 2077.

But that doesn’t mean we humble PC gamers can’t glean information from Ampere’s AI-centric reveal. Here are five key things that Nvidia’s Ampere architecture means for the next-gen GeForce lineup.

1. Ampere isn’t for you yet, but it will be

If you’re looking for specific details about GeForce graphics cards, well, keep waiting. Like the Volta and Pascal GPU architectures before it, Ampere’s grand reveal took shape in the form of a mammoth GPU built to accelerate data center tasks. Unlike Volta, however, Ampere will indeed be coming to consumer graphics cards too.

In a prebriefing with business reporters, Huang said that Ampere will streamline the Nvidia GPU lineup, replacing both the data center-centric Volta GPUs and the Turing-based GeForce RTX 20-series. The hardware inside each specific GPU will be tailored to the market it’s targeting, though. “There’s great overlap in the architecture, but not in the configuration,” MarketWatch reports Huang as saying when asked how the consumer and workstation GPUs will compare.

2. Ampere jumps to 7nm

As widely expected, Nvidia’s Ampere GPUs are built using the 7nm manufacturing process, moving forward from the 12nm process used for Turing and Volta. It’s a big deal.

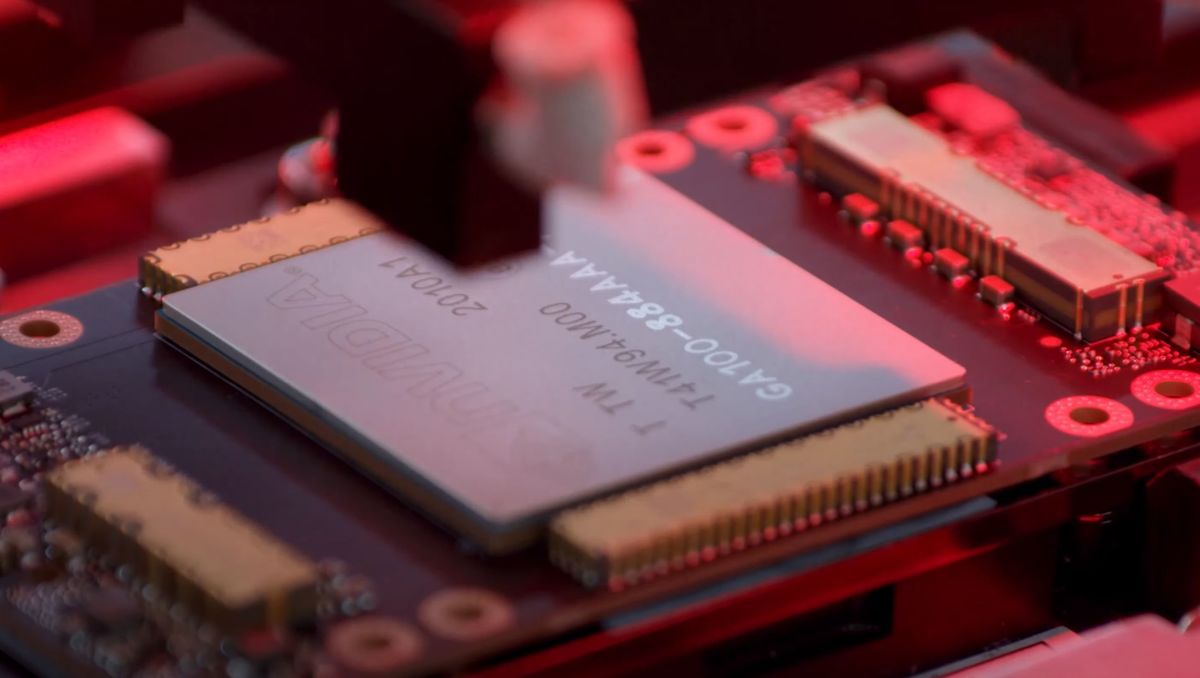

The Ampere GPU at the heart of the A100 is called GA100, a teaser video released by Nvidia shows. (Image: Nvidia)

Smaller transistors mean better performance and power efficiency. The “Navi”-based Radeon RX 5000-series graphics cards beat Nvidia to 7nm, and the transition helped AMD’s offerings greatly increase their efficiency. While Radeon cards had run hot and power-hungry for years, the 7nm Navi cards drew even with their GeForce counterparts in both performance and efficiency, which is no small feat. Looking back to Team Green’s own past, Nvidia’s transition from the GeForce GTX 900-series’ 28nm process to the GTX 10-series’ 16nm process resulted in huge performance gains.

In other words, history says we should expect wonderful advancements from Ampere-based GeForce GPUs.

3. Ampere squeezes in a lot more cores

The move to smaller transistors also means you can squeeze more cores into the same space. Whereas the Volta flagship, the Tesla V100, deployed 21.1 billion transistors, 5,120 CUDA cores, and 80 streaming multiprocessor clusters into its 815 mm^2 die, the new Ampere-based A100 crams 54 billion transistors, 6,912 CUDA cores, and 108 SMs into its 826 mm^2 die.

That’s a big leap forward, and more GPU means faster graphics cards. For reference, the GeForce RTX 2080 Ti has 4,352 CUDA cores in its 754 mm^2 die. Its successor might be downright bristling with cores.
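To put those numbers in perspective, here is a rough back-of-the-envelope sketch in Python that uses only the figures quoted above. The `Gpu` class and its field names are made up for illustration, and since no transistor or SM count is quoted here for the RTX 2080 Ti, those fields are left empty rather than guessed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Gpu:
    """Illustrative container for the spec figures quoted in the article."""
    name: str
    cuda_cores: int
    die_mm2: float
    transistors_bn: Optional[float] = None  # billions; None if not quoted
    sms: Optional[int] = None               # streaming multiprocessors

    def density_mtr_per_mm2(self) -> Optional[float]:
        """Millions of transistors per square millimeter, if known."""
        if self.transistors_bn is None:
            return None
        return self.transistors_bn * 1000 / self.die_mm2


# Figures taken directly from the text above.
GPUS = {
    "Tesla V100": Gpu("Tesla V100", 5120, 815, transistors_bn=21.1, sms=80),
    "A100": Gpu("A100", 6912, 826, transistors_bn=54.0, sms=108),
    "RTX 2080 Ti": Gpu("RTX 2080 Ti", 4352, 754),
}


def summarize() -> None:
    """Print density and core-count comparisons for the three GPUs."""
    v100, a100 = GPUS["Tesla V100"], GPUS["A100"]
    for gpu in GPUS.values():
        density = gpu.density_mtr_per_mm2()
        shown = f"{density:.1f} MTr/mm^2" if density is not None else "n/a"
        print(f"{gpu.name}: {gpu.cuda_cores} cores, {shown}")
    density_gain = a100.density_mtr_per_mm2() / v100.density_mtr_per_mm2()
    core_gain = a100.cuda_cores / v100.cuda_cores
    print(f"A100 vs. V100 density: {density_gain:.2f}x")
    print(f"A100 vs. V100 CUDA cores: {core_gain:.2f}x")


if __name__ == "__main__":
    summarize()
```

By this math, the A100 fits roughly 2.5 times as many transistors into each square millimeter as the V100, while CUDA cores and SMs each grow by 35 percent. Both chips work out to 64 CUDA cores per SM, so much of the extra transistor budget is evidently going somewhere other than raw CUDA core count.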

4. Ampere’s AI brains got smarter

Volta and Turing introduced tensor cores to Nvidia’s GPUs. Tensor cores accelerate machine learning tasks, and in GeForce GPUs, they power the awesome Deep Learning Super Sampling (DLSS) 2.0 technology and “denoise” the grainy artifacts generated by real-time ray tracing’s light casting.

The A100 GPU utilizes third-gen tensor cores that greatly improve performance on 16-bit “FP16” half-precision floating point tasks, add “TF32 for AI” capabilities for single-precision tasks, and now support FP64 double-precision tasks, as well. It remains to be seen how (and potentially even if) the third-gen tensor cores get deployed in Ampere-based consumer GPUs, but with Nvidia pushing DLSS and machine learning so aggressively, it seems like a lock that next-gen GeForce GPUs will have leveled-up AI in some manner, especially if rumors about greatly enhanced ray-tracing performance prove true. More rays means more noise, and more noise means better denoising is required.

5. Ampere supports PCIe 4.0

Nvidia didn’t announce this for its DGX-A100 system, but Supermicro also revealed new systems powered by the Ampere A100 GPU, and that announcement confirms that the next-gen hardware supports the cutting-edge PCIe 4.0 interface. AMD’s Ryzen 3000-series processors were the first to embrace the new interface, which delivers a big speed boost over the PCIe 3.0 slots found in computers for several years running.

A DGX-A100 Ampere system, fresh out of Nvidia CEO Jensen Huang’s oven. (Image: Nvidia)

When it comes to graphics cards, the move may seem somewhat academic. Navi-based AMD Radeon 5700 graphics cards that support PCIe 4.0 aren’t any faster than they are in PCIe 3.0 systems, as our PCIe 4.0 primer explains, and generally, most graphics cards don’t come close to saturating the PCIe 3.0 interface yet.

That “most” matters, though. TechPowerUp’s testing shows that the fearsome $1,200 GeForce RTX 2080 Ti indeed gets a small but measurable performance boost when running in a PCIe 3.0 x16 slot rather than a PCIe 3.0 x8 slot, indicating that it’s approaching the upper boundaries of PCIe 3.0’s capabilities in multi-GPU gaming rigs. Running two GPUs in a mainstream, non-HEDT system splits one PCIe 3.0 x16 connection across the two slots, leaving each card with an x8 link.

If the Ampere-based RTX 2080 Ti successor indeed packs many more CUDA cores and a lot more graphics oomph, it could overwhelm PCIe 3.0 x8 connections. Deploying PCIe 4.0 skirts that roadbump. It also introduces a novel twist for system builders. Intel’s latest 10th-gen Core CPUs opted to remain on PCIe 3.0 rather than upgrading to PCIe 4.0. While Intel CPUs generally run slightly faster than their AMD Ryzen counterparts in games, if you plan on building a fire-breathing, no-holds-barred system with several high-end Ampere GeForce GPUs inside, opting for Ryzen and its PCIe 4.0 support could be the better move. Interesting!
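The lane math behind that argument is easy to sketch. The per-lane rates below (8 GT/s for PCIe 3.0 and 16 GT/s for PCIe 4.0, both with 128b/130b encoding) come from the PCIe specifications rather than from Nvidia’s or Supermicro’s announcements, and the function name is purely illustrative. These are theoretical one-direction peaks, so real-world throughput will be lower.

```python
# Per-lane signaling rates in gigatransfers per second, per the PCIe specs.
# These values are not taken from the article itself.
GT_PER_S = {"3.0": 8.0, "4.0": 16.0}

# PCIe 3.0 and 4.0 both use 128b/130b line encoding:
# 128 payload bits are carried in every 130 bits on the wire.
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_s(gen: str, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s for a PCIe link.

    Ignores packet and protocol overhead, so treat it as an upper bound.
    """
    bits_per_lane = GT_PER_S[gen] * ENCODING_EFFICIENCY  # usable Gbit/s
    return bits_per_lane / 8 * lanes                     # bits -> bytes


if __name__ == "__main__":
    for gen, lanes in [("3.0", 16), ("3.0", 8), ("4.0", 8), ("4.0", 16)]:
        bandwidth = link_bandwidth_gb_s(gen, lanes)
        print(f"PCIe {gen} x{lanes}: {bandwidth:.2f} GB/s")
```

Under those assumptions, a PCIe 4.0 x8 link offers the same theoretical bandwidth as a PCIe 3.0 x16 link, about 15.75 GB/s each way. That is why a two-card rig on PCIe 4.0 would give each GPU as much headroom as a single card gets from a full PCIe 3.0 x16 slot today.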


What we still want to see

Ampere is here, and soon it’ll be powering next-gen GeForce graphics cards. (Image: Nvidia)

Nvidia’s limited A100 announcement failed to reveal some specifications of key interest to gamers, most notably Ampere’s clock speeds and ray tracing performance. Faster clock speeds mean faster gaming performance, generally. More dedicated RT cores could greatly enhance the ray tracing capabilities of next-gen GeForce GPUs, meanwhile, reducing the steep performance penalty currently inflicted when you activate the gorgeous lighting effects in games—especially if paired with Nvidia’s new advanced tensor cores.

The GTC 2020 keynote and Nvidia’s associated documentation didn’t touch on either aspect, alas. Recent leaks and rumors suggest that GeForce Ampere GPUs will clock even higher than the speedy RTX 20-series and deliver up to a 4x increase in ray tracing performance. The source doesn’t have an established track record for accurate leaks, though, and you should always take rumors with a big pinch of salt. That said, all the extra space provided by the jump to 7nm gives Nvidia a lot of room to pack in more CUDA cores, more RT cores, or (hopefully) a mixture of both.

Bottom line: Nvidia’s next-gen Ampere GPU architecture is finally here, and even in data center form, there’s a lot for PC gamers to get excited about. Now the wait for Ampere-based GeForce graphics cards begins. Nvidia hasn’t uttered a peep about them, but expect to see the new hardware later this year. With “Big Navi” Radeon GPUs and impressive next-gen consoles bringing bigger performance and ray tracing to AMD hardware in the coming months, Nvidia’s sure to want to drop a hammer on its rival’s ambitions.