When Apple finally released its M1 Max processor in October, the Internet predictably saw dark days for PC laptops. Some even felt bad for PC laptop makers being uncompetitive with the MacBook Pro for perhaps “years.” Those predictions may have to be retuned a bit now that Intel and Nvidia have both come out swinging at Apple, however.

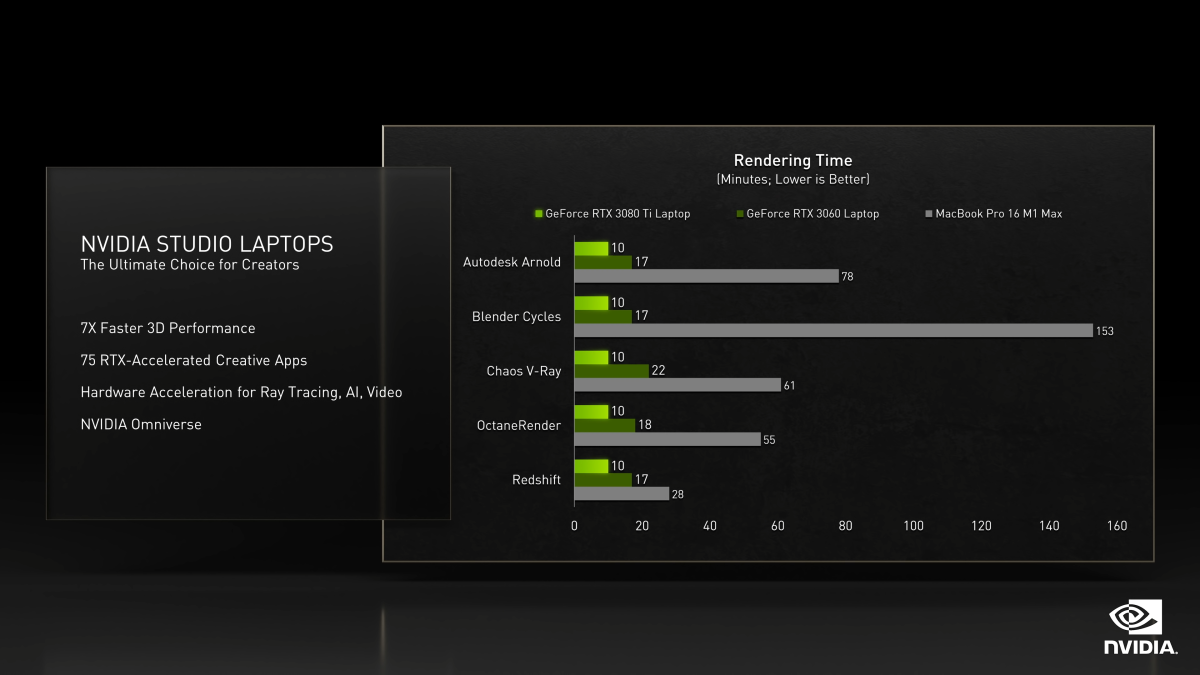

Nvidia was the first to step on Apple’s sneakers when it announced its new GeForce RTX 3070 Ti and GeForce RTX 3080 Ti Laptop GPUs. Nvidia casually compared not just its newest GeForce RTX 3080 Ti Laptop GPU against Apple’s fastest M1 Max, but also the far more pedestrian GeForce RTX 3060 Laptop GPU using Autodesk Arnold, Blender, Chaos V-Ray, OctaneRender and Redshift.

As you can see from the comparison with the MacBook Pro 16’s M1 Max, both the new GeForce flagship and the far blander RTX 3060 Laptop GPU simply crush the M1 Max. And by crush, we mean crush, because when a GeForce RTX 3080 Ti Laptop GPU takes 10 minutes to perform a render and a GeForce RTX 3060 Laptop GPU takes 22 minutes using Autodesk Arnold, versus 78 minutes for the M1 Max, it’s a beat-down. That’s an 87 percent decrease in rendering time for the RTX 3080 Ti vs. the M1 Max, and a 72 percent decrease for the RTX 3060. That’s a shellacking no matter how you count it for working creators, but it should be pointed out that many of these apps have long been optimized for Nvidia’s GPUs, giving GeForce a home field advantage.
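For anyone who wants to check the math, here is a minimal Python sketch of the arithmetic, using only the three Autodesk Arnold render times quoted above. The function names are our own illustration and are not taken from Nvidia's material.

```python
# Render times in minutes, as quoted from Nvidia's Autodesk Arnold comparison.
M1_MAX_MINUTES = 78
GEFORCE_MINUTES = {
    "GeForce RTX 3080 Ti Laptop GPU": 10,
    "GeForce RTX 3060 Laptop GPU": 22,
}


def percent_reduction(baseline_minutes, new_minutes):
    """Percentage by which render time shrinks relative to the baseline."""
    return (baseline_minutes - new_minutes) / baseline_minutes * 100


def speedup(baseline_minutes, new_minutes):
    """How many times faster the new result is than the baseline."""
    return baseline_minutes / new_minutes


for name, minutes in GEFORCE_MINUTES.items():
    reduction = percent_reduction(M1_MAX_MINUTES, minutes)
    factor = speedup(M1_MAX_MINUTES, minutes)
    print(f"{name}: {reduction:.0f}% less render time, {factor:.1f}x faster")
```

Note that "percent less time" and "times faster" describe the same gap in two different ways, which is worth keeping in mind when vendors pick whichever framing sounds bigger.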

Nvidia

For example, it’s not clear if the testing Nvidia did for Blender Cycles used the version that’s currently being ported to Apple’s M1 and Metal API. We’d guess not, since the presentation would have been based on numbers likely prepared well before CES 2022 to meet approval for public dissemination. With that Blender support still in a pre-beta stage, it’s highly doubtful the Blender score came from an alpha version.

So is it fair if Nvidia shows off a stack of benchmarks arguably optimized for its GPU versus the unknown quantity of M1 Max support? It depends.

If your idea of a good time is to get into a yelling match on Twitter while wearing an Apple team jersey over the “unfairness” of Nvidia’s results, then it’s definitely not fair. If you’re a working professional who gets paid to shovel pixels in Autodesk Arnold, Blender, V-Ray, OctaneRender, or Redshift, then it’s most certainly a fair test, since the only thing you probably care about is how fast your hardware can make you money.

Intel steps up too

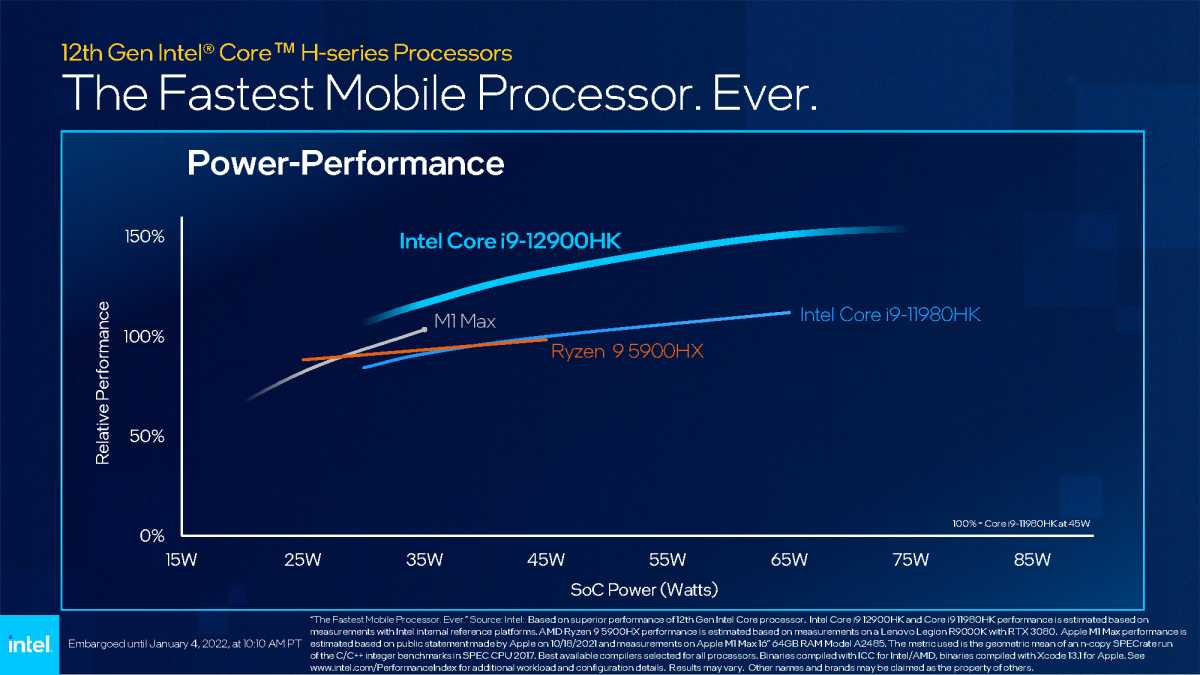

After Nvidia poked Apple in the nose a few times, Intel jumped into the ring later that morning by saying that its new 12th-gen “Alder Lake” laptop CPUs are faster than not only its older 11th-gen Tiger Lake H CPUs and AMD’s Ryzen 9 5900HX, but also Apple’s M1 Max. So yes, as the slide below says, the fastest mobile processor. Ever.

Intel

What’s that based on? Fortunately, responsible companies show their homework, as Intel did. In fact, Intel shows way more of its work than Nvidia did in its video, which published the results but no information about how it tested the laptops.

Intel says its performance results for the Apple M1 Max are estimated based on: “public statements made by Apple on 10/18/2021 and measurements on Apple M1 Max 16″ 64GB RAM Model A2485. The metric used is the geometric mean of an n-copy SPECrate run of the C/C++ integer benchmarks in SPEC CPU 2017.”
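If “geometric mean” doesn’t ring a bell, the rough idea can be sketched in a few lines of Python. The benchmark names and ratio values below are made-up placeholders for illustration only; they are not Intel’s numbers, Apple’s numbers, or real SPEC results.

```python
import math


def geometric_mean(ratios):
    """Geometric mean: the n-th root of the product of n positive values."""
    if not ratios or any(r <= 0 for r in ratios):
        raise ValueError("geometric mean needs at least one value, all positive")
    # Averaging the logarithms avoids overflow when multiplying many values.
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


# Hypothetical per-benchmark ratios (higher is better). Placeholder data only.
hypothetical_ratios = {
    "bench_a": 40.0,
    "bench_b": 55.0,
    "bench_c": 32.0,
    "bench_d": 61.0,
}

score = geometric_mean(list(hypothetical_ratios.values()))
print(f"Composite score (geometric mean): {score:.1f}")
```

A geometric mean is a common way to roll several sub-scores into one, because a single outlier result pulls it around less than it would a plain arithmetic average.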

For the uninitiated, SPEC is published by Standard Performance Evaluation Corporation, an industry group that has come together to create various agreed-upon tests and proclaims itself a “beacon of truth for 30 years.” Members include a who’s who of tech companies including AMD, Apple, Intel, and Nvidia. You’re typically required to publish much of the fine print, including what was used to compile the executables for the test too.

In Intel’s case, it said it used ICC for the Windows laptops and Apple’s Xcode 13.1 for the M1 Max. To its credit (although some would say it’s just to avoid further Imperial entanglements), Intel discloses far more details on how it achieved its claim here.

Intel

Still, the upshot of Intel’s tests is that even at 28 watts or so of power consumption, it’s easily outperforming the M1 Max in a test even Apple has signed onto. As you push the wattage envelope of the Core i9-12900HK, you’re looking at perhaps near 45 percent more performance than that M1 Max.

So, what should you believe? One problem with SPEC benchmarks, though sometimes based on actual application code, is the lack of relatability for consumers. They can be useful for computer science students arguing in the quad, but for most people they’re pretty esoteric. We’d probably want to see something we can relate to before determining if, and by how much, the new 12th-gen Alder Lake beats the M1 Max. It’s very hard to argue against a test published by a benchmarking group even Apple is a member of, though.

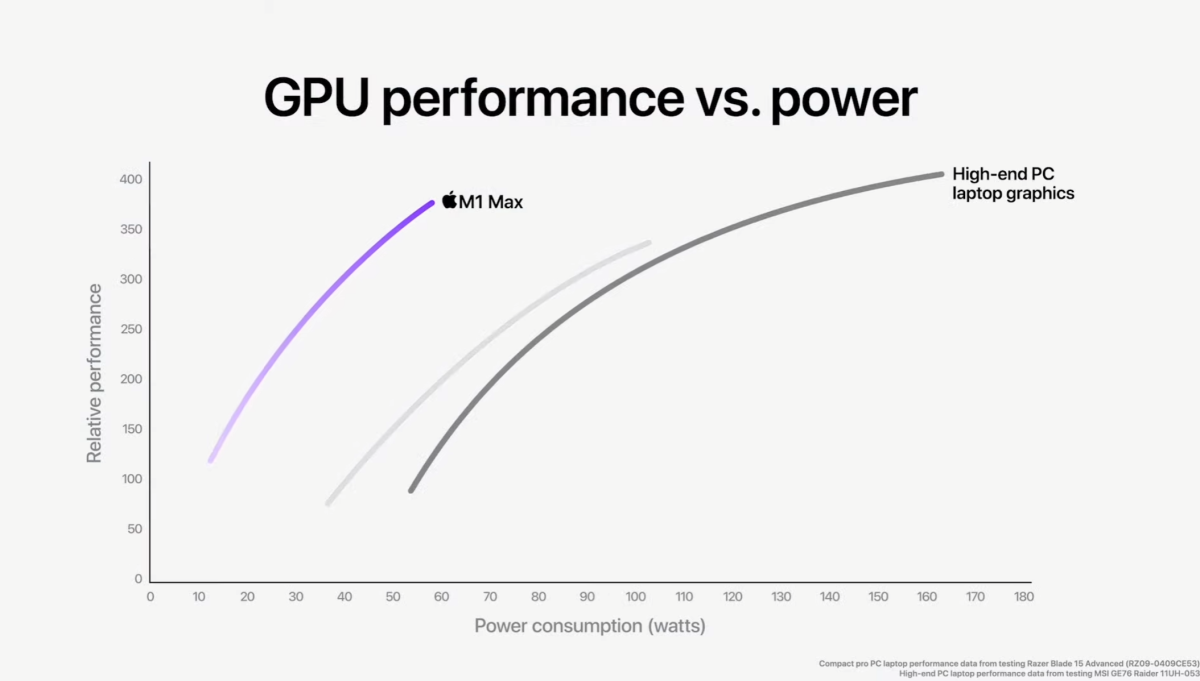

And it’s good Intel published its homework. We wish Nvidia had said a little more about how it tested the laptops against the Apple M1 Max. Did the workloads include ray tracing features that GeForce GPUs pack dedicated hardware for? But it’s hard to complain about what Nvidia did when Apple has been publishing results like the one below. This is a benchmark result Apple showed off for the M1 Max’s launch and, frankly, we still have no idea what Apple based it on. The dark gray line represents MSI’s killer GE76 Raider gaming laptop, which is actually displayed as being faster than the M1 Max, to Apple’s credit. The lighter gray line is a Razer Blade Advanced, which is slightly slower than the M1 Max. Both are outfitted with GeForce RTX 3080 Laptop GPUs.

Apple admits it’s slower than Nvidia’s GeForce RTX 3080 Laptop GPU, but it’s really signaling just how power efficient it is.

Apple

The M1 Max may lose to or merely come close to Nvidia’s GPUs in raw performance, but Apple’s real victory is its power consumption. Apple’s M1 Max and its TSMC 5nm process are indeed impressive for the power they consume. At the same time, just what the hell was Apple testing? We have no doubt the M1 Max is indeed efficient, but of the three “our bars are longer” presentations, Apple’s is the thinnest on actual details and mostly leaves you wondering just how it determined what it did. If you’re concerned over Nvidia or Intel being fair, you should be even more concerned about Apple’s claims.

In the end, consumers should always take any vendor’s claims with a grain of salt. Wait for independent reviews using tests that relate to what you actually do with your laptop before deciding what to buy.

One of the founding fathers of hardcore tech reporting, Gordon has been covering PCs and components since 1998.