More and more products and services are taking advantage of the modeling and prediction capabilities of AI. This article presents the nvidia-docker tool for integrating AI (Artificial Intelligence) software bricks into a microservice architecture. The main advantage explored here is the use of the host system's GPU (Graphics Processing Unit) resources to accelerate multiple containerized AI applications.

To understand the usefulness of nvidia-docker, we will start by describing what kind of AI can benefit from GPU acceleration. Next, we will present how to implement the nvidia-docker tool. Finally, we will describe what tools are available to use GPU acceleration in your applications and how to use them.

Why use GPUs in AI applications?

In the field of artificial intelligence, two main subfields are used: machine learning and deep learning. The latter is part of a larger family of machine learning methods based on artificial neural networks.

In the context of deep learning, where operations are essentially matrix multiplications, GPUs are more efficient than CPUs (Central Processing Units). This is why the use of GPUs has grown in recent years. Indeed, GPUs are considered the heart of deep learning because of their massively parallel architecture.
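To make the parallelism argument concrete, here is a minimal pure-Python sketch of a matrix product. It is not GPU code, and the function names are only illustrative. Each output cell depends on a single row of the first matrix and a single column of the second, so all cells can be computed independently. This is the property a GPU exploits by spreading the cells over thousands of threads.

```python
def output_cell(a, b, i, j):
    """Compute one cell of the product; it reads only row i of a and column j of b."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul(a, b):
    """Naive matrix product.

    Every (i, j) cell is independent of the others, so a GPU could compute
    them all at the same time, for instance with one thread per cell.
    """
    rows, cols = len(a), len(b[0])
    return [[output_cell(a, b, i, j) for j in range(cols)] for i in range(rows)]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
product = matmul(a, b)
print(product)  # [[19, 22], [43, 50]]
```

On a CPU the cells are computed one after another (or a few at a time), whereas the same loop body maps naturally onto a GPU kernel.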

However, GPUs cannot execute just any program. Indeed, they use a specific language (CUDA for NVIDIA) to take advantage of their architecture. So, how do you use and communicate with GPUs from your applications?

The NVIDIA CUDA technology

NVIDIA CUDA (Compute Unified Device Architecture) is a parallel computing architecture combined with an API for programming GPUs. CUDA translates application code into an instruction set that GPUs can execute.

A CUDA SDK and libraries such as cuBLAS (Basic Linear Algebra Subroutines) and cuDNN (Deep Neural Network) have been developed to communicate easily and efficiently with a GPU. CUDA is available in C, C++ and Fortran. There are wrappers for other languages including Java, Python and R. For example, deep learning libraries like TensorFlow and Keras are based on these technologies.

Why use nvidia-docker?

Nvidia-docker addresses the needs of developers who want to add AI functionality to their applications, containerize them and deploy them on servers powered by NVIDIA GPUs.

The objective is to set up an architecture that allows the development and deployment of deep learning models in services available via an API. The utilization rate of GPU resources is thus optimized by making them available to multiple application instances.

In addition, we benefit from the advantages of containerized environments:

- Isolation of the instances of each AI model.

- Colocation of several models with their specific dependencies.

- Colocation of the same model under several versions.

- Consistent deployment of models.

- Model performance monitoring.

Natively, using a GPU in a container requires installing CUDA in the container and giving privileges to access the device. With this in mind, the nvidia-docker tool has been developed, allowing NVIDIA GPU devices to be exposed in containers in an isolated and secure manner.

At the time of writing this article, the latest version of nvidia-docker is v2. This version differs greatly from v1 in the following ways:

- Version 1: Nvidia-docker is implemented as an overlay to Docker. That is, to create the container you had to use nvidia-docker (Ex: nvidia-docker run ...), which performs the actions (among others the creation of volumes) that make the GPU devices visible in the container.

- Version 2: The deployment is simplified by replacing Docker volumes with Docker runtimes. Indeed, to launch a container, it is now necessary to use the NVIDIA runtime via Docker (Ex: docker run --runtime nvidia ...).

Note that due to their different architectures, the two versions are not compatible. An application written for v1 must be rewritten for v2.
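The difference between the two invocations can be sketched with a small Python helper that builds the command line for each version. This is only an illustration: the helper name and the image name my-ai-service:latest are hypothetical, and the only flags used are the ones quoted above.

```python
def build_run_command(version, image, command):
    """Return the argument list that starts a GPU container with nvidia-docker v1 or v2."""
    if version == 1:
        # v1: the nvidia-docker wrapper is called instead of the docker CLI.
        prefix = ["nvidia-docker", "run"]
    elif version == 2:
        # v2: the regular docker CLI is used and the NVIDIA runtime is selected explicitly.
        prefix = ["docker", "run", "--runtime", "nvidia"]
    else:
        raise ValueError(f"unsupported nvidia-docker version: {version}")
    return prefix + [image] + list(command)


# "my-ai-service:latest" is a hypothetical image name used for illustration.
v1_command = build_run_command(1, "my-ai-service:latest", ["nvidia-smi"])
v2_command = build_run_command(2, "my-ai-service:latest", ["nvidia-smi"])
print(" ".join(v1_command))
print(" ".join(v2_command))
```

The returned list can be passed directly to subprocess.run on a host where the corresponding version is installed.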

Setting up nvidia-docker

The elements required to use nvidia-docker are:

- A container runtime.

- An available GPU.

- The NVIDIA Container Toolkit (main part of nvidia-docker).

Prerequisites

Docker

A container runtime is required to run the NVIDIA Container Toolkit. Docker is the recommended runtime, but Podman and containerd are also supported.

The official documentation describes the installation procedure for Docker.

NVIDIA driver

Drivers are required to use a GPU device. In the case of NVIDIA GPUs, the drivers corresponding to a given OS can be obtained from the NVIDIA driver download page, by filling in the information on the GPU model.

The drivers are installed via the downloaded executable. For Linux, use the following commands, replacing the file name with that of the downloaded file:

chmod +x NVIDIA-Linux-x86_64-470.94.run

./NVIDIA-Linux-x86_64-470.94.run

Reboot the host machine at the end of the installation so that the installed drivers are taken into account.
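After the reboot, one way to check that the driver is loaded is to query nvidia-smi, the utility shipped with the NVIDIA driver. The following is a minimal sketch, assuming nvidia-smi is on the PATH when the driver is installed. The function name is illustrative, and it returns an empty list when no driver is detected.

```python
import shutil
import subprocess


def installed_gpus():
    """Return one "name, driver_version" string per GPU, or [] if no driver is detected."""
    # nvidia-smi is installed with the driver; if it is missing, assume no driver.
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    # A non-zero exit code usually means the driver is not loaded correctly.
    if result.returncode != 0:
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


gpus = installed_gpus()
print(gpus if gpus else "No NVIDIA driver detected")
```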

Installing nvidia-docker

Nvidia-docker is available on the GitHub project page. To install it, follow the installation guide that matches your server and architecture.
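Once the installation is finished, you may want to verify that Docker knows about the NVIDIA runtime. The sketch below assumes that the v2 installation registers a runtime named nvidia in the Docker daemon configuration (commonly /etc/docker/daemon.json on Linux); both the location and the sample content are assumptions that may differ on your system, and the function name is illustrative.

```python
import json


def has_nvidia_runtime(daemon_config_text):
    """Return True if the Docker daemon configuration declares a runtime named "nvidia"."""
    config = json.loads(daemon_config_text)
    return "nvidia" in config.get("runtimes", {})


# Sample content, assumed to resemble what the installation writes to daemon.json.
sample_config = """
{
    "runtimes": {
        "nvidia": {
            "path": "nvidia-container-runtime",
            "runtimeArgs": []
        }
    }
}
"""

print(has_nvidia_runtime(sample_config))  # True
print(has_nvidia_runtime("{}"))           # False
```

On a real host, the text would be read from the daemon configuration file instead of the sample string.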

We now have an infrastructure that provides isolated environments with access to GPU resources. To use GPU acceleration in applications, several tools have been developed by NVIDIA (non-exhaustive list):

- CUDA Toolkit: a set of tools for developing software/programs that can perform computations using the CPU, RAM, and GPU together. It can be used on x86, Arm and POWER platforms.

- NVIDIA cuDNN: a library of primitives to accelerate deep learning networks and optimize GPU performance for major frameworks such as TensorFlow and Keras.

- NVIDIA cuBLAS: a library of GPU accelerated linear algebra subroutines.

By using these tools in application code, AI and linear algebra tasks are accelerated. With the GPUs now visible, the application is able to send the data and operations to be processed on the GPU.

The CUDA Toolkit is the lowest-level option. It offers the most control (memory and instructions) to build custom applications. Libraries provide an abstraction of CUDA functionality. They allow you to focus on application development rather than on the CUDA implementation.
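As an illustration of the library-level approach, the following sketch asks TensorFlow which GPUs it can see from inside the container. It assumes TensorFlow is installed in the container image; if it is not, the helper (whose name is illustrative) simply returns an empty list rather than failing.

```python
def visible_gpus():
    """Return the names of the GPUs TensorFlow can see, or [] if none (or no TensorFlow)."""
    try:
        import tensorflow as tf  # assumption: TensorFlow is installed in the image
    except ImportError:
        return []
    # list_physical_devices returns the devices that are exposed to this process.
    return [device.name for device in tf.config.list_physical_devices("GPU")]


gpu_names = visible_gpus()
if gpu_names:
    print("GPU acceleration available on:", gpu_names)
else:
    print("No GPU visible, computations will run on the CPU")
```

If the list is empty inside a container started with the NVIDIA runtime, the driver or the runtime configuration is the first thing to check.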

After all these factors are carried out, the architecture making use of the nvidia-docker company is all set to use.

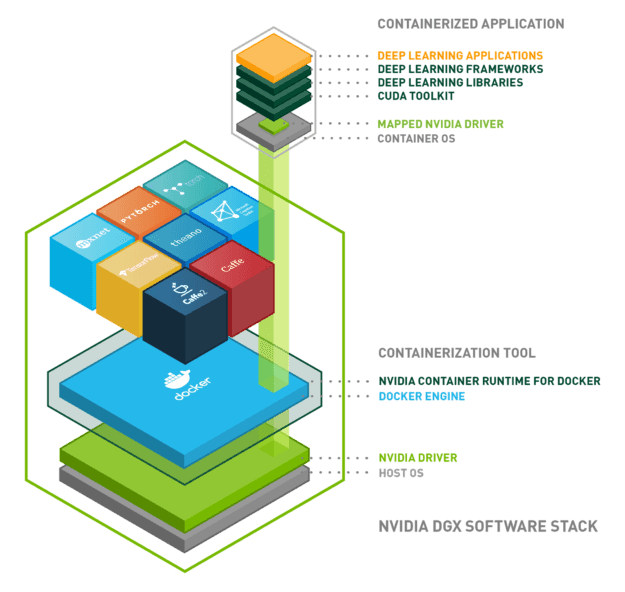

In this article is a diagram to summarize almost everything we have seen:

Conclusion

We have set up an architecture allowing the use of GPU resources from our applications in isolated environments. To summarize, the architecture is composed of the following bricks:

- Operating system: Linux, Windows …

- Docker: isolation of the environment using Linux containers

- NVIDIA driver: installation of the driver for the hardware in question

- NVIDIA container runtime: orchestration of the previous three

- Applications in Docker containers:

  - CUDA

  - cuDNN

  - cuBLAS

  - TensorFlow/Keras

NVIDIA continues to develop tools and libraries around AI technologies, with the goal of establishing itself as a leader. Other technologies may complement nvidia-docker or may be more suitable than nvidia-docker depending on the use case.